Investing in the intelligence economy: AI opportunities across global sectors

Artificial intelligence (AI) is driving a structural shift in the global economy, powering innovation across industries, accelerating infrastructure investment, and creating new competitive dynamics for long-term investors.

Fundamental Growth and Core Equity team

Why AI is a foundational investment theme, not a fleeting trend

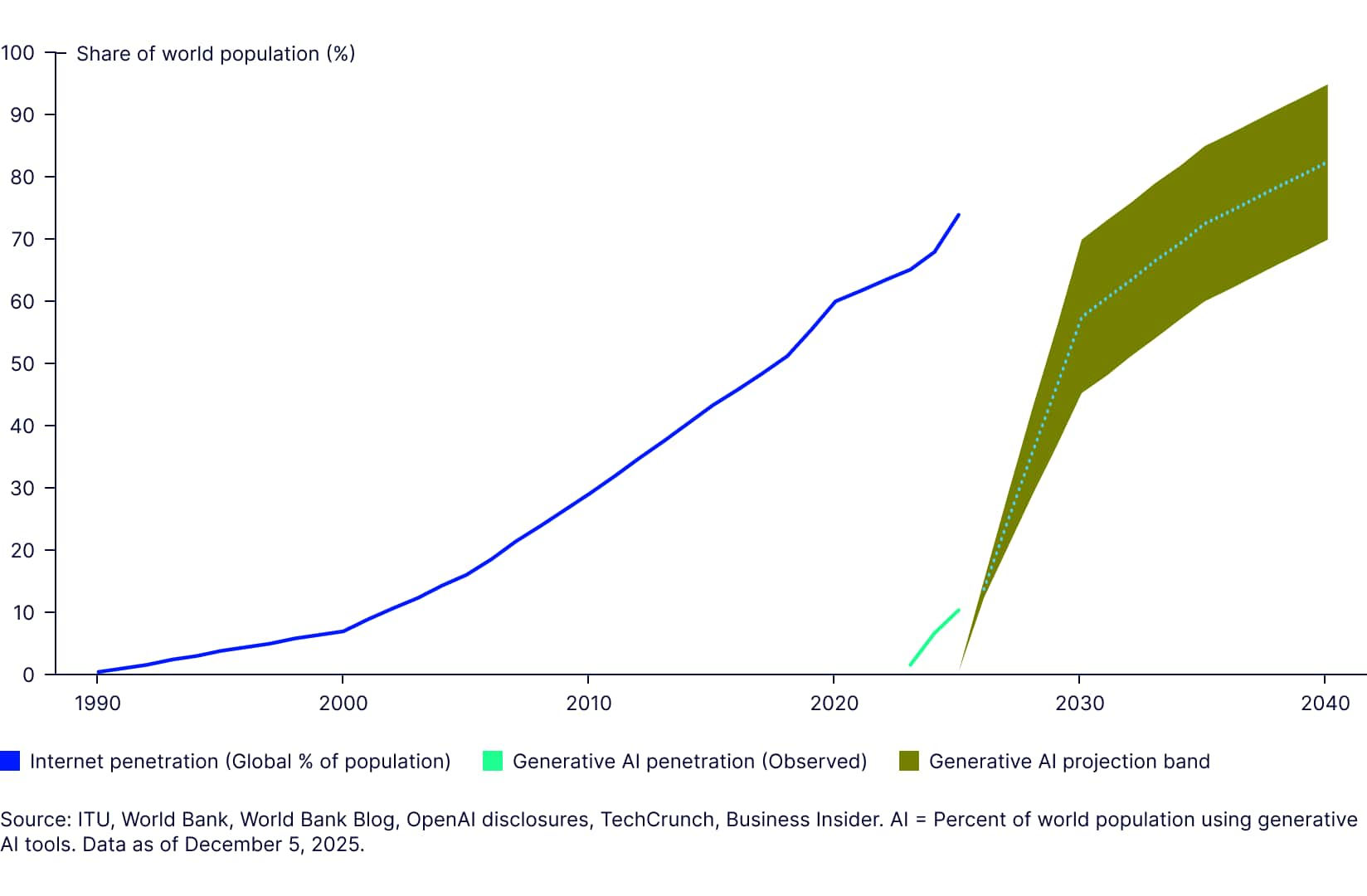

Artificial intelligence isn’t just a tech buzzword—it’s a structural force reshaping the global economy. Much like the internet in the 1990s, AI is a general-purpose technology with the potential to transform industries, redefine productivity, and create entirely new business models.

While headlines often focus on short-term hype, the real story is unfolding beneath the surface: AI is becoming embedded in the infrastructure of enterprise software, financial services, and beyond. For long-term investors, this isn’t a moment to chase trends—it’s a moment to understand the architecture of future growth.

What makes AI a structural shift in the global economy?

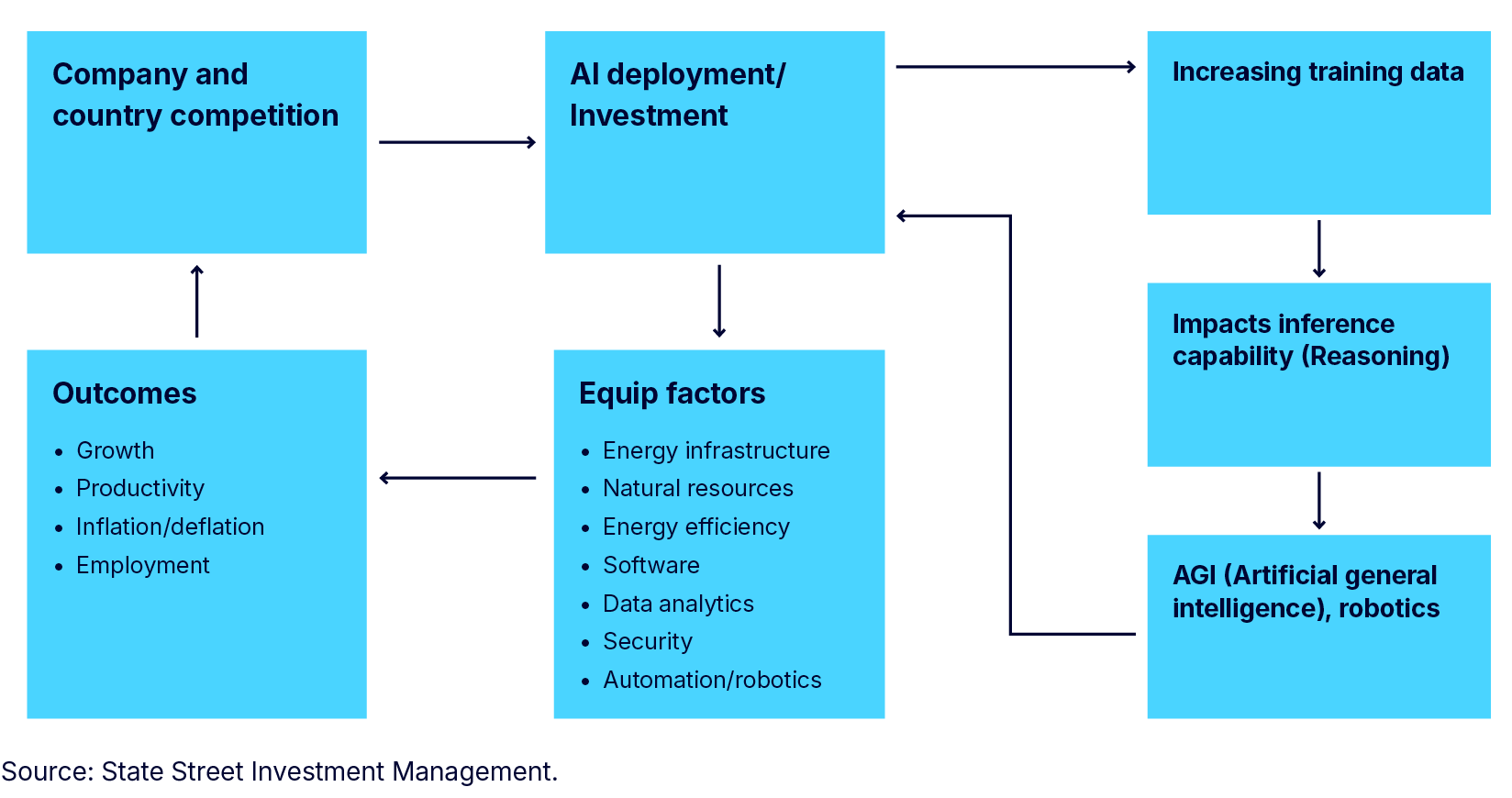

While in certain respects AI is an extension of ongoing technological evolution, the stakes, both geopolitically and among corporations, are potentially much greater this time. Because the architecture of AI tends to favor scale, a “winner takes all” world appears to be emerging, as evidenced by the massive investments taking place in AI infrastructure and large language models (LLMs).

At a macro level, AI is influencing labor markets, capital allocation, and even monetary policy. Automation is shifting the demand for certain skill sets, while AI-driven insights are changing how investors and businesses deploy capital. Central banks and regulators are beginning to grapple with the implications of AI on inflation, employment, and systemic risk. Governments are racing to secure leadership in AI capabilities, recognizing its role in defense, intelligence, and industrial competitiveness.

Figure 1: The AI arms race

At the same time, corporations are pouring billions into AI infrastructure, particularly into compute power and LLMs, which require immense capital and data scale to train and deploy effectively. For investors, capturing the growth of AI isn’t just about picking the right companies; it’s about understanding the structural forces that will define competitive moats and economic influence for decades to come.

How AI mirrors the internet’s early investment opportunity

Like the internet in the 1990s, AI is early in its monetization curve but further ahead in its infrastructure buildout. There will be times when stocks get ahead of fundamentals or economic cycles pressure earnings, but those episodes shouldn’t be confused with the inevitable developments that will dramatically change the world in the next 25 years and beyond.

As in the dot-com era, much of how this will play out is still unknown. When Amazon started as a bookstore, very few could envision the dominance, variety, and delivery speed we take for granted today.

US e-commerce sales rose from around $27 billion in 2000 to $1.2 trillion by 2024.1 Amazon has been a clear beneficiary, producing an annual return of 17.6% since 2000,2 outperforming the S&P 500 by 9.9 percentage points annually,3 while continuing to leverage its scale to invest in AI so that its ecosystem of offerings will benefit in the future. Amazon saw some heavy drawdowns along the way, but the 25-year return is still stunning.
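To put these figures in perspective, the short Python sketch below works through the compounding arithmetic. It uses only the rounded numbers quoted above; the 24-year and 25-year spans, the function names, and the reading of the 9.9 gap as percentage points are our assumptions, so the results are illustrative and may differ from the sourced figures.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values over `years` years."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def growth_multiple(annual_return: float, years: float) -> float:
    """How many times an investment multiplies at a constant annual return."""
    return (1.0 + annual_return) ** years


# Rounded figures from the text; the year counts are assumptions.
ecommerce_cagr = cagr(27e9, 1.2e12, years=24)               # 2000 -> 2024
amazon_multiple = growth_multiple(0.176, years=25)          # 17.6% a year
index_multiple = growth_multiple(0.176 - 0.099, years=25)   # 9.9 points lower

print(f"Implied US e-commerce CAGR: {ecommerce_cagr:.1%}")
print(f"Growth of $1 at 17.6% a year for 25 years: {amazon_multiple:.1f}x")
print(f"Growth of $1 at 7.7% a year for 25 years: {index_multiple:.1f}x")
```

The point of the comparison is that a gap of a few percentage points a year, sustained over decades, compounds into a very large difference in ending value.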

Figure 2: US e-commerce sales growth has soared past brick-and-mortar (2000-2024)

Compound annual growth rate of US e-commerce sales 4

Compound annual growth rate of US brick-and-mortar sales 5

Why long-term investors should look beyond the hype

AI isn’t just an industry—it’s a substrate for innovation across the economy. In many industries, AI will soon be as essential and expected as having a website. Companies that don’t adopt it may be seen as outdated or uncompetitive. Today, only 35% of companies have deployed AI in at least one function,6 but 42% are considering AI adoption in the near future.7

Edge computing, which brings data processing closer to where data is generated (for example, on sensors in a personal device rather than in centralized cloud data centers), is still in the early stages. Inferencing, the stage after training when a model uses what it learned to make predictions or decisions on new data, is just beginning.

In particular, reasoning models that use chain-of-thought and multi-step inference (reasoning processes that break down complex problems into a series of connected logical steps) are still new. While each token (word or chunk of text) will increasingly use less computing power as efficiencies improve, serving hundreds of millions or even billions of users at scale can make inference 10-100 times more demanding than training the model in the first place.

Physical AI, which understands the real world and can be used in fields like robotics and autonomous driving, is also relatively new, with investment ramping rapidly. These applications will require even more processing power, energy, and infrastructure than today’s generative AI use cases. For long-term investors, this means the real opportunity lies not in chasing short-term hype, but in identifying the foundational technologies, platforms, and enablers that will underpin AI’s evolution.

Figure 3: Consumer adoption curves projection, internet vs. AI (1990-2040)

The evolution of AI: From algorithms to agents

AI has come a long way from rule-based algorithms and narrow task automation. Today, we’re entering a new phase—one defined by intelligent agents capable of reasoning, adapting, and interacting with their environments.

This shift marks a fundamental evolution: from tools that execute instructions to systems that can make decisions, learn from feedback, and operate autonomously.

A very brief history of AI: From symbolic logic to generative models

AI's journey began with early ideas about machines mimicking human thought. In 1950, English mathematician and computer scientist Alan Turing posed the question “Can machines think?” in his paper "Computing Machinery and Intelligence."8

AI’s formal birth occurred in 1956 at the Dartmouth Summer Research Project on Artificial Intelligence, where the term "artificial intelligence" was coined. This workshop, led by American computer scientist John McCarthy and others, explored how machines could simulate aspects of intelligence.

“An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer.”9

The aim of the conference, as McCarthy put it in the conference proposal, was to find out “how to make machines use language, form abstractions and concepts, [and] solve kinds of problems now reserved for humans.”10 It took decades to develop the compute power necessary for this.

In the early days of AI, researchers began exploring symbolic logic: systems built to follow human-defined rules and logical structures to simulate reasoning. The work began with tasks that only humans were thought to have enough knowledge to perform, like playing checkers or chess. Now, AI has evolved into systems that can not only perceive and classify data but also generate content, reason through complex problems, and increasingly act autonomously.

Figure 4: Timeline of AI’s development

1950

Alan Turing publishes “Computing Machinery and Intelligence,” introducing the concept of the Turing Test11

1956

The term “artificial intelligence” is coined at the Dartmouth Conference, organized by John McCarthy12

1966

ELIZA, an early natural language processing chatbot, is developed by Joseph Weizenbaum at MIT13

1972

SHRDLU, a program capable of understanding and interacting in a virtual environment, is created by Terry Winograd14

1997

IBM’s Deep Blue defeats world chess champion Garry Kasparov15

2012

AlexNet achieves a breakthrough in image recognition using deep convolutional neural networks16

2014

Generative Adversarial Networks (GANs) are introduced by Ian Goodfellow17

2016

Google DeepMind’s AlphaGo defeats Go champion Lee Sedol, showcasing reinforcement learning18

2017

Google researchers introduce the Transformer architecture in their landmark paper “Attention Is All You Need,” laying the foundation for many modern AI models19

2022

OpenAI releases ChatGPT, popularizing LLMs and generative AI20

2024

OpenAI unveils “o1,” a reasoning model that uses chain-of-thought to work through multi-step problems21

2025

Many LLM developers launch new releases, moving agentic AI from hype to enterprise reality

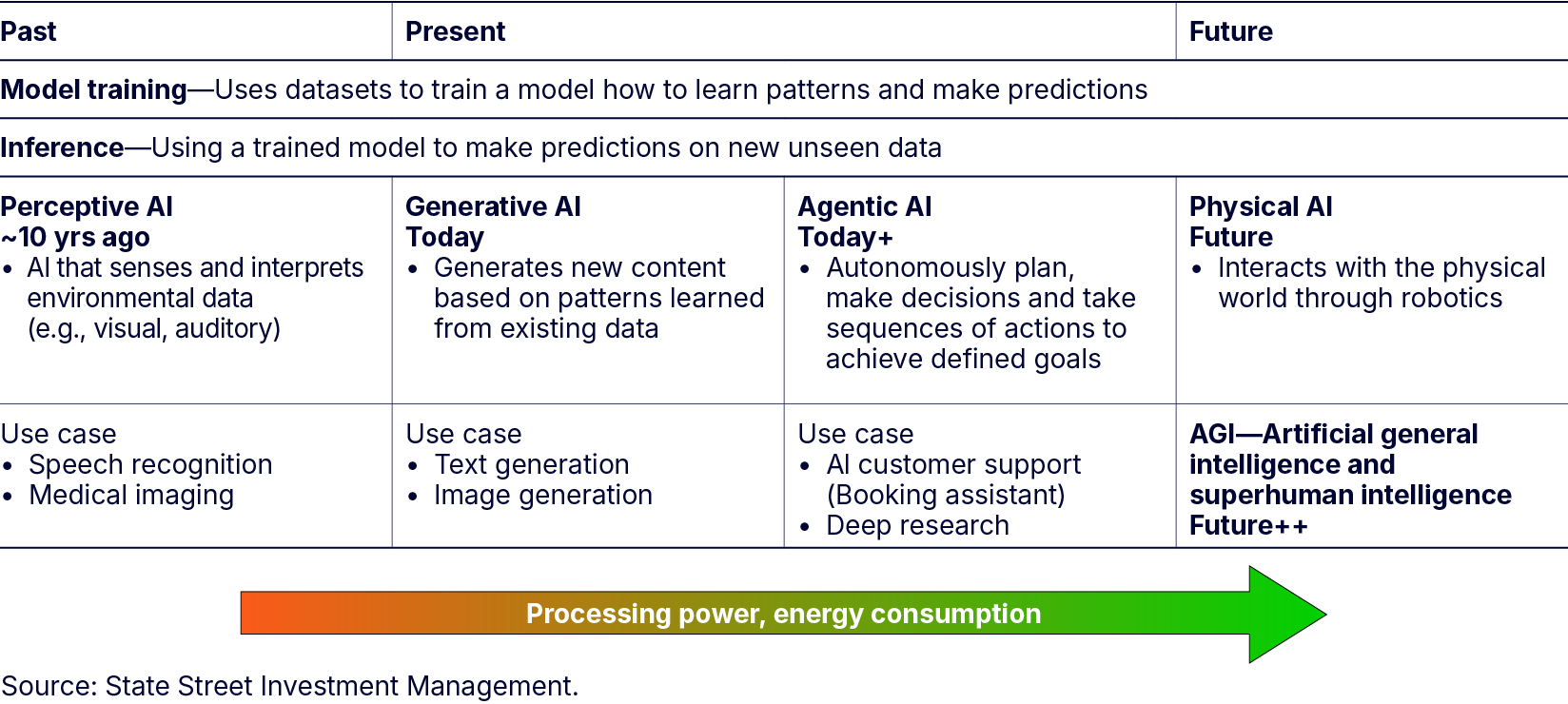

Understanding the four phases of AI: Perceptive, generative, agentic, physical

Practical applications of AI have been around for many years. Examples include speech recognition and medical image recognition. Today, applications like Microsoft Copilot create content through what is known as generative AI.

There are four main phases of AI’s evolution: perceptive, generative, agentic, and physical.

- Perceptive AI: Systems that can sense and interpret data—such as images, audio, and text. This phase includes technologies like image recognition, speech-to-text, and anomaly detection. It enables machines to “see” and “hear” the world through data

- Generative AI: Models that can create new content based on learned patterns. This includes text generation, image synthesis, music composition, and code writing. Generative AI powers tools like ChatGPT and DALL·E

- Agentic AI: AI systems capable of reasoning, planning, and taking autonomous actions toward goals. These agents use techniques like chain-of-thought reasoning and multi-step inference to operate with minimal human input, managing tasks and adapting to feedback

- Physical AI: AI integrated into real-world systems such as robotics, autonomous vehicles, and smart manufacturing. These systems combine perception, generation, and agency with physical interaction, requiring real-time decision-making and substantial compute power

Agentic AI is at an earlier stage but increasingly in focus; it works much like an assistant that uses AI to reason and produce output. Further into the future is physical AI, and ultimately, artificial general intelligence (AGI) and artificial super intelligence (ASI), which would match and exceed human thought, respectively.

Why the evolution of AI matters for investors

Each phase of AI demands more compute power and energy than the last. Efficiencies enabled by the next generation of NVIDIA chips and by software enhancements like DeepSeek have only sped up the development process, given the consistent need for compute in these tasks. This phenomenon, known as Jevons Paradox, has numerous precedents: when technology makes the use of a resource more efficient, consumption of that resource goes up, not down.

Figure 5: AI evolution and Jevons Paradox

The prospect that physical AI will demand even more power and compute gives us confidence in the long-term sustainability of infrastructure demand and the AI buildout.

As AI progresses, each stage marks a deeper integration of intelligence, unlocking new capabilities and reshaping the landscape of innovation and investment. Perceptive AI gave machines the ability to interpret the world, laying the groundwork for automation in data-rich domains. Generative AI expanded that foundation by enabling machines to create. Agentic AI takes a step further, introducing autonomy and decision-making, where systems can act on behalf of users or organizations, transforming workflows and operational models.

Finally, physical AI brings intelligence into the real world, embedding cognition into machines that move, manipulate, and interact with their environments. Each phase doesn’t just add functionality—it demands new infrastructure and opens up investment opportunities across hardware, software, and services.

The new AI stack: Models, ecosystems, and the race for scale

As AI systems grow more capable, they’re reshaping the technology stack from the ground up. Foundational models now serve as the base layer, powering a rapidly evolving ecosystem of tools, platforms, and applications. Around them, new infrastructure is emerging to support training, deployment, and orchestration at scale.

What are LLMs and why do they matter?

LLMs are advanced AI systems that power applications ranging from chatbots to data analytics, and even play a role in autonomous technologies and robotics. They work by processing vast amounts of text to understand and generate human-like language, enabling computers to perform tasks that require reasoning and communication. Many companies are investing heavily and racing to develop increasingly powerful LLMs.

ChatGPT (OpenAI), Claude (Anthropic), Gemini (Google), Llama (Meta), DeepSeek (High-Flyer Capital, based in China), and Grok (xAI/Musk) are some of the more notable ones. Recently, Anthropic raised $13 billion, valuing the company at $183 billion, and it is projecting $70 billion in revenue by 2028.22

OpenAI recently completed a secondary stock sale that valued the company at approximately $500 billion.23 This makes it the most valuable private company in the world, as of October 2025. For early-stage companies with a wide range of competitors and potential outcomes, these are staggering numbers.

At this stage, it is unclear how all these companies will monetize the value of their LLMs. Paid user subscriptions are one way, and services or applications that leverage the model are another. But LLMs are important because they are driving a new wave of economic productivity by automating tasks that rely on language, reasoning, and decision-making.

The question becomes: Will these revenue sources be enough to justify the massive investments each company is making? Microsoft announced plans to spend $80 billion on AI infrastructure in FY2025 alone.24 A significant portion of this is for building AI-enabled data centers for training and deploying AI models, including OpenAI's. The Stargate Project, a joint venture involving OpenAI, Microsoft, and other partners, was announced, with plans for a $500 billion AI infrastructure system. This project alone averages an annual spend of $125 billion over four years.

Oracle also surprised markets with a massive $455 billion backlog in Q1 FY2026, a 359% year-over-year jump, driven by four multi-billion-dollar AI infrastructure deals with major clients.25 All of these data points support the notion of an AI arms race.
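As a rough check on the scale of these commitments, the sketch below reproduces the arithmetic behind two of the figures above. It assumes the Stargate spend is spread evenly over four years and that a "359% jump" means the backlog grew by 359% (to 4.59 times its prior level); the function names are ours and purely illustrative.

```python
def average_annual_spend(total: float, years: int) -> float:
    """Average yearly spend if a total is spread evenly across `years`."""
    return total / years


def prior_period_value(current: float, yoy_growth: float) -> float:
    """Back out last year's value from this year's value and a YoY growth rate."""
    return current / (1.0 + yoy_growth)


# Figures quoted in the text; even phasing and the growth reading are assumptions.
stargate_per_year = average_annual_spend(500e9, 4)
oracle_prior_backlog = prior_period_value(455e9, 3.59)

print(f"Stargate average annual spend: ${stargate_per_year / 1e9:.0f} billion")
print(f"Implied Oracle backlog a year earlier: ${oracle_prior_backlog / 1e9:.0f} billion")
```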

Why AI ecosystems will capture more value than models alone

While each model has unique advantages, LLMs may ultimately be commoditized, with the value captured instead by applications that combine the data in LLMs with proprietary data the LLMs do not contain. Some liken LLMs to enablers like electricity or internet access. Companies like Meta, Google, Microsoft, and Amazon have a large suite of products that will leverage LLMs in conjunction with existing client relationships, workflows, and data, which may give them a competitive advantage relative to the standalone LLM companies.

Similarly, software companies are advancing their services through applications that sit on top of externally developed LLMs, in conjunction with proprietary company data that they organize and store via customer relationships. Independent LLM developers will need to either view these companies as customers or compete directly with them, but likely not both.

The case for long-term investment in AI

From accelerating productivity to enabling breakthroughs in healthcare, finance, and logistics, AI’s transformative potential is vast. Yet, realizing this promise requires patient capital and a strategic horizon. Short-term gains may capture headlines, but the true value lies in sustained investment that fuels innovation, infrastructure, and talent—positioning investors to benefit from exponential growth as AI becomes deeply embedded in the global economy.

Why AI efficiency drives more demand, not less

The more efficient technology becomes, the greater the capability to do things that were not possible before. This self-reinforcing cycle, known as Jevons Paradox, was first observed in the 19th century when improvements in steam technology made coal use more efficient, paradoxically driving even greater coal consumption. Similarly, technology continues to build on itself, leading to new capabilities and investment across industries.
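One simple way to see when efficiency raises rather than lowers resource use is a constant-elasticity demand model. The sketch below is an illustration under that assumption, not a forecast: the function name, the baseline of 100 units, and the elasticity values are hypothetical. If efficiency rises by a factor g, the cost of each unit of useful output falls by g, demand for output rises by g raised to the price elasticity, and total resource use changes by g raised to (elasticity minus 1).

```python
def resource_use_after_efficiency_gain(
    baseline_use: float,
    efficiency_gain: float,
    price_elasticity: float,
) -> float:
    """Total resource use after efficiency improves by `efficiency_gain` times.

    Assumes constant-elasticity demand: output demanded scales with
    efficiency_gain ** price_elasticity, while the resource needed per
    unit of output scales with 1 / efficiency_gain.
    """
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return baseline_use * efficiency_gain ** (price_elasticity - 1.0)


# Hypothetical example: efficiency doubles, baseline resource use is 100 units.
for elasticity in (0.5, 1.0, 1.5):
    use = resource_use_after_efficiency_gain(100.0, 2.0, elasticity)
    print(f"elasticity {elasticity}: resource use goes from 100.0 to {use:.1f}")
```

In this model, consumption of the resource rises only when demand is elastic (elasticity above 1), which is the condition the Jevons argument relies on for AI compute and energy.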

The bottlenecks: Compute, energy, and talent

Improvements in how AI systems operate and scale are necessary given bottlenecks that could slow AI’s development. AI is power hungry. Electricity consumption by data centers quadrupled from 2005 to 2024 and is forecast to quadruple again by 2030.26 Some estimates say that electricity demand from data centers worldwide is set to more than double by 2030, to around 945 terawatt-hours (TWh), with demand from AI-optimized data centers set to more than quadruple.27 That’s slightly more than the entire electricity consumption of Japan today.28

Figure 6: The explosive growth in energy demand from data centers

Projected increase in electricity demand from AI-optimized data centers by 2030 29

Projected share of global electricity demand growth attributable to data centers (including AI) between now and 2030 in advanced economies 30

Projected electricity demand from data centers worldwide by 2030 31

Renewables are adding much of the new power, but data centers still need steady base-load capacity from natural gas and coal, which is shrinking as coal plants retire. Grid operators are flagging risks of blackouts and power prices are rising sharply because of tight supply. AI server and data center energy demand may require as much as 720 billion gallons of water annually.32 Other resources like copper and rare earth materials are also seeing increasing strategic importance.

These increases come despite the unprecedented advances by NVIDIA delivering next generation semiconductors that exponentially increase processing power at ever greater power efficiency levels. Even semiconductors themselves are bumping up against Moore’s law limitations that AI will need to solve.

These bottlenecks are driving investment and opportunities across sectors. Companies building data centers, advanced chips, renewable energy systems, grid upgrades, and cooling technologies are essential to overcoming these constraints. Investing in these areas can capture long-term growth tied to AI adoption. Talent is a bottleneck too: Meta’s recent high-profile hiring spree, poaching top talent at astronomical costs, underscores the intensity of the AI talent war and the enormous stakes involved.

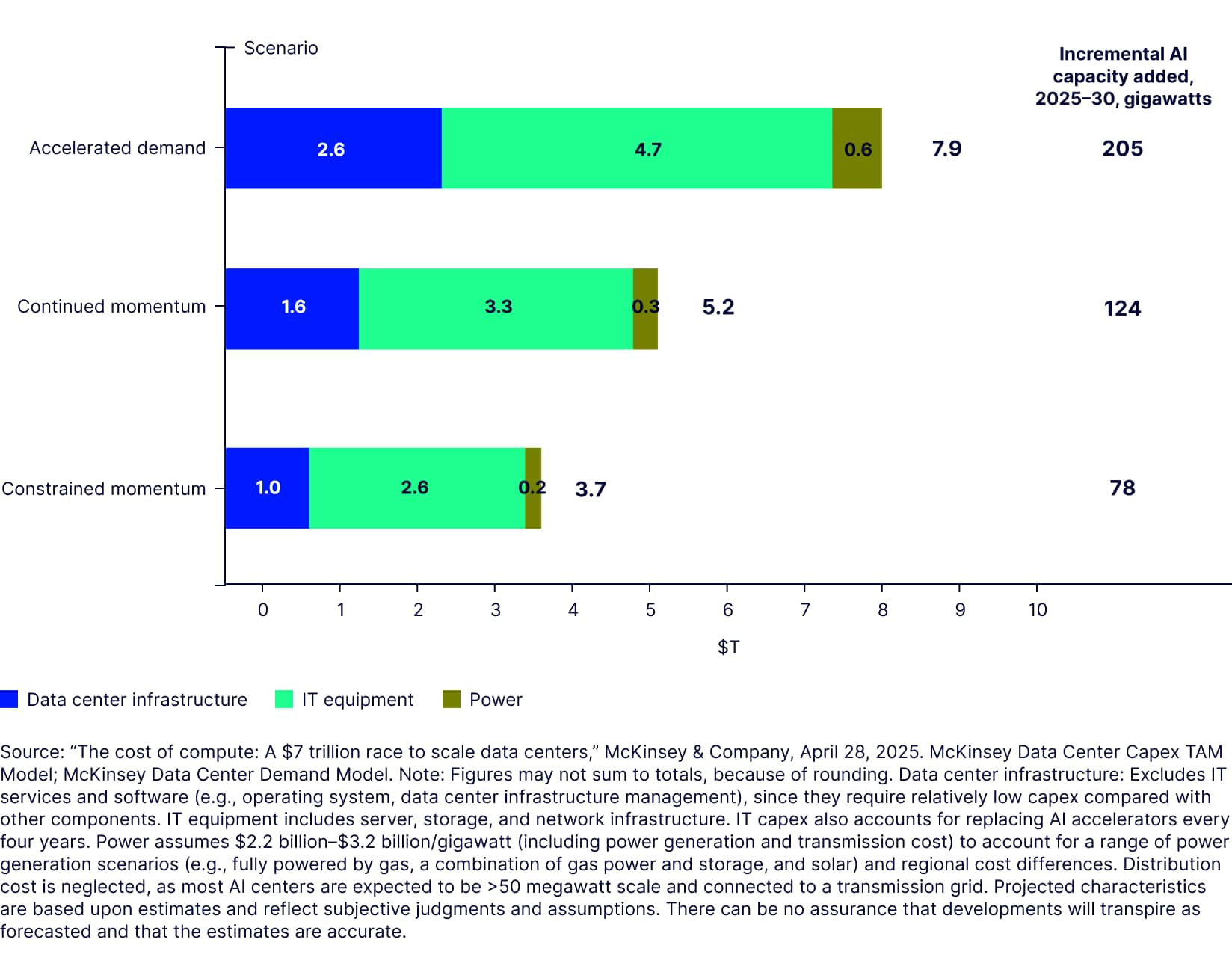

What the market may be missing about AI’s growth trajectory

AI could drive nearly $8 trillion in investment by 2030, with the bulk of spending geared toward IT equipment and data center infrastructure.33 Capital expenditures (CapEx) for AI hyperscalers are estimated to reach roughly $520 billion in 2026.34 Governments are also racing to invest and build infrastructure to achieve technological supremacy.

The stakes are enormous

The implications are enormous, potentially bifurcating countries and regions, much like companies, into clear winners and losers. For example, countries that supply cheap labor may find it difficult to compete with robotics and automation. In turn, that could lead to lower economic growth and political uncertainty. Many jobs will no longer exist, so retraining and creating new sources of employment may be needed. Resources and infrastructure will increase in importance to enable the full power and opportunities of AI.

Economic and political ripple effects

Differences in productivity rates between countries will impact inflation rates, employment and economic growth, currencies, fiscal and monetary policies and every other economic indicator—and thus political agendas and elections. We’re already seeing these issues in today’s geopolitical landscape, and they’re likely to grow more intense as the stakes rise.

Who’s leading—and who’s catching up?

Countries that are leading and prospering today include the US, Taiwan, China, and Korea. The Middle East, particularly the Gulf nations (UAE, Saudi Arabia, Qatar), is rapidly positioning itself as a global AI hub, forming partnerships and leveraging its vast energy resources and capital. India faces high stakes: a huge population, skilled workforce, and a chance to modernize fast. But it trails the US and China in AI R&D and must move beyond low-cost manufacturing.

Europe risks falling further behind other regions in economic growth as its AI adoption lags that of other developed regions. While there are many globally competitive European companies, the region touts its strong AI regulatory leadership, which threatens to limit its AI progress, and its base of technological know-how is already lagging. There are many other factors that determine a country’s outlook and growth trajectory, but AI is a significant enough threat and opportunity that countries can ill afford to fall behind.

Figure 7: Possible scenarios for AI-driven investment

Global data center total capital expenditures driven by AI, by category and scenario, 2025–2030 projection, $T

If history is any guide, we may be only at the beginning of AI’s economic transformation. E-commerce shows how transformative technologies keep evolving long after their introduction. More than 25 years later, the e-commerce competitive landscape continues to shift, with ripple effects across real estate, payment systems, and supply chains. The investment horizon for technological change is often underestimated.

How AI is reshaping key sectors of the economy

From finance and healthcare to manufacturing and logistics, AI is driving efficiency, unlocking new capabilities, and reshaping competitive dynamics. These changes aren’t incremental; they signal a structural shift that will redefine how industries operate and create value. AI has transformational prospects for all sectors and across industries.

Semiconductors: From cyclical to structural growth?

Semiconductors are at the heart of AI infrastructure development. Historically, the sector has been volatile, with lower valuation multiples than other growth areas, mainly because profits swing with consumer demand (PCs, phones, autos) and the ups and downs of semiconductor inventory cycles.

But as AI’s penetration into all aspects of the economy grows, this volatility should moderate, supported by structural growth. Graphics processing units (GPUs) used in data center servers are, based on our estimates, forecast to grow to a $1 trillion total addressable market in 3-5 years from around $100 billion in 2024.35 NVIDIA dominates the space, with AMD making progress. Application-specific integrated circuits (ASICs) are designed for specific AI workloads and used mostly for inferencing. These are at an earlier stage than GPUs and are expected to grow rapidly from $11 billion in 2024 to $270 billion in 3-5 years.36
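To translate these market-size estimates into annual growth rates, the sketch below computes the compound growth each forecast implies at both ends of the 3-5 year window. The inputs are the rounded figures quoted above; the helper name and the dictionary layout are our own, and the output is illustrative only.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


# (2024 market size, forecast market size) in US dollars, as quoted in the text.
forecasts = {
    "Data center GPUs": (100e9, 1e12),
    "AI ASICs": (11e9, 270e9),
}

implied = {}
for segment, (start, end) in forecasts.items():
    implied[segment] = {
        "5_years": implied_annual_growth(start, end, 5),  # slower path
        "3_years": implied_annual_growth(start, end, 3),  # faster path
    }
    print(
        f"{segment}: {implied[segment]['5_years']:.0%} to "
        f"{implied[segment]['3_years']:.0%} a year"
    )
```

Even on the slower five-year path, both segments would need to grow by well over 50% a year to reach the forecast size.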

Supporting the development of these products and their advancing capability are the production equipment suppliers and foundries that produce the chips. Taiwan Semiconductor Manufacturing Company (TSMC) is the dominant global foundry and many of the other suppliers compete in oligopolistic industry structures. There may be a steady increase in semiconductor production equipment demand to support additional capacity increases, but also to assist with customer product roadmaps and productivity. Rising margins and returns on investment support the higher multiple thesis. Even memory suppliers, the most commoditized area in semiconductors, still trade at reasonably low P/E multiples, despite the explosion in demand from AI.

Despite the strong recent performance of TSMC, Micron, and SK Hynix, we believe the stocks are still priced attractively.

Figure 8: Valuation and performance metrics for select semiconductor and memory companies

| Company | Consensus P/E FY’26 | Consensus P/E FY’27 | 2025 returns through December 12, 2025 |
|---|---|---|---|
| Micron | 12.5 | 9.9 | 207% |
| SK Hynix | 6.4 | 5.8 | 225% |
| Taiwan Semiconductor Manufacturing Company | 19.4 | 16.3 | 36.7% |
| Mag 7 | 27.1 | 28.4 | |
| SPX | | | 17.2% |

Source: Bloomberg Finance, L.P., as of December 12, 2025. Past performance is not a reliable indicator of future performance. References to specific company stocks should not be construed as recommendations or investment advice. The statements and opinions are subject to change at any time, based on market and other conditions. Mag 7 includes Broadcom instead of Tesla.

Health Care: AI in drug discovery and personalized medicine

Health Care should see a tremendous boost in productivity from AI. Rising healthcare costs are a challenge every country is facing, particularly the United States.

US health care expenditures represented roughly 16% of its gross domestic product (GDP) in 2023, the highest among wealthy developed nations.37 Hospitals represent one third of this spend. This is unsustainable.

Paradoxically, drug discovery has steadily become more expensive and slower since the 1960s. Eroom’s Law, which is Moore’s Law in reverse, observes that the cost to bring a new drug to market doubles roughly every nine years. While the competitive implications are less clear, increased scientific understanding and capability will drive research productivity and streamline the number of drugs that go to trial, with better chances of success and reduced time to market, reversing the prior trend.
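A doubling time can be restated as an annual growth rate and a cumulative multiple, which makes the scale of the trend easier to see. The sketch below does this for the nine-year doubling period cited above; the function names and the 27-year and 60-year horizons are illustrative assumptions rather than figures from the source.

```python
def annual_growth_from_doubling_time(doubling_years: float) -> float:
    """Constant annual growth rate that doubles a quantity every `doubling_years`."""
    if doubling_years <= 0:
        raise ValueError("doubling_years must be positive")
    return 2.0 ** (1.0 / doubling_years) - 1.0


def cost_multiple(elapsed_years: float, doubling_years: float) -> float:
    """How many times a cost multiplies after `elapsed_years` of steady doubling."""
    return 2.0 ** (elapsed_years / doubling_years)


rate = annual_growth_from_doubling_time(9)   # nine-year doubling from the text
after_27_years = cost_multiple(27, 9)        # three doublings
after_60_years = cost_multiple(60, 9)        # hypothetical 60-year horizon

print(f"Implied annual cost growth: {rate:.1%}")
print(f"Cost multiple after 27 years: {after_27_years:.0f}x")
print(f"Cost multiple after 60 years: {after_60_years:.0f}x")
```

A cost growth rate of about 8% a year looks modest in any single year, but sustained for decades it compounds into a roughly hundredfold increase, which is why reversing the trend would matter so much.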

This could benefit pharmaceutical and biotechnology companies but have a negative impact on companies that provide tools and outsourced services to the industry.

Figure 10: Example of AI-powered progress in pharma

Pfizer (COVID-19 vaccine and PAXLOVID)

- Used AI and data analytics to review clinical trial data in just 22 hours after meeting the primary efficacy case counts38

- Used supercomputing to find the molecules that could deliver PAXLOVID in a pill form39

- Used AI to optimize analysis of supply chain data to identify, address, and monitor issues in the production of PAXLOVID40

Other trends include proactive patient care, enhanced diagnostics, robotic surgery, and a move to more personalized medicines. Report writing and regulatory filings will also see dramatic improvements through automation. Because of these factors, the sector is likely to become more profitable.

The economic implications could be enormous: lower government spending on healthcare as a percentage of GDP, longer life expectancy, and other effects on demographics and quality of life.

Energy and Industrials: Electrification and automation at scale

The increased demand for energy and electricity and the need for efficiency gains from AI are already becoming deeply rooted. Whether it's semiconductors, data centers, electrical infrastructure, or the impacts of AI and automation on every sector, Industrials are benefitting from a massive capital expenditure cycle.

Industries that are benefiting include:

- Engineering and design

- Large rental equipment

- Electrical equipment

- Machinery

- Automation

- Robotics

In all cases, these firms are increasingly leveraging software and AI to enable smarter manufacturing and using simulations and digital twins in product development and testing. Many parts of the economy could see a big boost in productivity, especially in farming and food supply. Rising labor productivity could also reshape employment patterns, potentially reducing the need to outsource jobs to lower-cost countries. These drivers firmly reinforce the AI arms race between countries and the “winner takes all” dynamic.

Software and services: Productivity, stickiness, and pricing power

As investors weigh whether some companies might be disrupted by the very technology that drives the sector forward, software stocks have shown mixed results in the AI era. Hanging over the sector is the slow progress in monetization from some software AI offerings along with the threat that new AI solutions may automate certain employee tasks, improve efficiency, and reduce the number of software seats sold.

Furthermore, enterprise adoption of AI has been slow. Agentic AI, using agents or bots to automate and perform specific client tasks, is in its infancy. Software companies are only recently rolling out their proprietary offerings. Countless surveys indicate that enterprises are only beginning to ramp up AI adoption. To take advantage of AI’s capabilities, enterprise data needs to be completely redesigned and reorganized.

Given this backdrop, software companies that are focused on data and security have excelled, while the rest of the sector has lagged. The opportunity for enterprise software vendors comes from selling additional, high-value solutions that organize and use client data to deliver customized solutions that improve efficiency. Software companies that are enterprise-focused, store their customers’ data, and provide a suite of solutions may be better positioned, but investors should assess each company on its own merits.

Figure 11: Software vendors are rapidly incorporating Gen AI technology in their solutions

| Company | Gen AI product | Commentary |
|---|---|---|
| Microsoft | GitHub Copilot | |
| Microsoft | Microsoft 365 Copilot | |
| Adobe | Adobe Firefly | |
| Salesforce | Agentforce | |
| ServiceNow | Now Assist | |
| CrowdStrike | Charlotte AI | |

Source: State Street Investment Management.

Companies that are simply a platform for users without integration of client data are already being disintermediated, but the negative narrative across software companies may be incorrect in our opinion. AI is likely to spur growth among many of these vendors rather than replace them as businesses adopt AI.

Communication Services: Social media and advertising impact

AI is already impacting social media companies, with some like Meta and Google investing heavily. Companies are using AI to improve the user experience in core products through increased targeting of content (video recommendations, improved search, aggregating proprietary data from users in a more intelligent way, etc.), which is likely to increase the effectiveness and returns on advertising dollars.

“One of the most powerful applications of AI in communication is audience segmentation and targeting. By analyzing large datasets from social media and web traffic, AI can identify distinct audience segments based on behavior, preferences, and demographics. This allows communicators to deliver more personalized and relevant messages.”

—Evan Kropp, Ph.D., University of Florida, “The Impact of AI on Global Strategic Communication”

Each company aims to expand its subscriber base by improving its customers’ experience. Revenue generation can also come from selling unique, proprietary data to the model developers through licensing deals. New products are also being created, such as vision lenses and AI-enabled shopping assistants that understand the user’s intent and ultimately drive them to action.

Other impacts include automating marketing tasks and customer service, content creation, and improving platform safety and security. Important success factors will be:

- Scale

- Ecosystems

- Proprietary data

- Accuracy of AI generated results, and

- Overall strategic and operational execution

Financials and consumer: Emerging use cases and competitive moats

The Financial sector logically represents a large pool of use cases in a data-rich environment for AI. Early viable use cases involve cost savings via fraud detection, cybersecurity, credit scoring and risk management, and operational and customer service productivity.

AI is just starting to create new revenue opportunities—from smarter sales targeting and lower customer churn to new AI-powered products. Examples include increased personalization of banking and insurance services that analyze a customer’s complete financial picture, and autonomous financial agents that anticipate life events and proactively suggest products. But adoption still faces some key hurdles that can be particularly acute due to regulation in the sector, including:

- Data privacy

- Regulatory uncertainty

- Accountability

- Transparency

- Security, and

- Need for skilled employees

Many companies may also have to run old and new systems at the same time as they build out their AI-powered platforms and services. As in other industries, companies with a data advantage are in a strong position.

Overall, adopting advances in AI is crucial for the sector, but adoption will likely be evolutionary rather than revolutionary. From an investment thesis perspective, AI is clearly a factor that could drive some idiosyncratic investments, but macro drivers tend to overshadow technology improvements when it comes to the performance of Financial stocks.

Figure 12: From chatbot to checkout, retail is running on intelligence

The percentage of consumers that think retailers should use AI to improve shopping experiences 41

The percentage of consumers that are using AI tools in their path to purchase at least occasionally 42

AI applications will continue to leverage consumer data to drive customer engagement and fundamentally alter the consumer’s purchase journey. Examples include personalized recommendations, interactive shopping experiences, and growing loyalty through ongoing engagement. As in most industries, companies will be forced to invest in AI to develop these capabilities.

While most use cases are oriented towards driving revenue, other improvements are also being targeted. Productivity, marketing, R&D, and product development should also benefit. Consumer companies with direct customer relationships and proprietary datasets may be better positioned to benefit from AI. Although adoption is still at an early stage, developments are advancing rapidly.

Risks and constraints in the AI investment landscape

Artificial intelligence presents opportunities for investors, but its rise comes with risks and constraints. Investors must navigate a complex and evolving landscape. Understanding these challenges is essential for making informed investment decisions.

What could go wrong? Overbuild, regulation, and energy limits

While the AI investment theme may be durable long term, there are several risks to be aware of. So far, hyperscalers (Amazon Web Services, Microsoft Azure, Google Cloud, and Oracle Cloud Services), along with Meta and the numerous independent LLM companies, continue to increase CapEx to win the AI arms race. It is likely that not all of these companies will earn an adequate return to justify their investment.

There is likely to be a shakeout, followed by consolidation, and then secondary innovations as the market continues to develop. The 2000 internet bubble, when most websites failed, is a useful comparison for any potential shakeout, though there are some differences.

The primary differences are:

- The bulk of spending is supported by cash flow generation, though debt levels are increasing, and vendor financing and circular investments may be propping up demand

- The business models of the hyperscalers are superior to almost all the dot-com era companies

- There are far fewer LLM companies than there were websites created

Additionally, AI models are likely to retain significant residual value, unlike the worthless websites from the dot-com era. However, consolidation may trim spending and demand for AI infrastructure. At the same time, new cloud hosting entrants such as Oracle and CoreWeave could spark a price war and possibly even consolidation among major AI chip buyers.

Other risks include restrictions on countries’ ability to purchase semiconductors, as we have seen with China. Given rising geopolitical tensions, partly from AI’s defense and security implications, any number of risks could materialize. Ultimately, any of these setbacks may be a long-term buying opportunity, as the shift to the cloud still has a long runway supporting the need for further investment.

Another risk is that future applications such as AGI, robotics, and other real-world uses arrive later than anticipated, which could lead to an eventual oversupply of infrastructure. Expectations aren’t currently high, so this risk may be low. Additionally, enterprise adoption of AI is in its infancy. So is edge AI, where AI models run directly on devices such as Internet of Things sensors, cell phones, and robots. Generative AI has a long runway for growth, so the later stages of AI development are not needed in the immediate future.

Lastly, bottlenecks in other areas that support the AI growth story could cause short-term reductions in demand. Power consumption is forecast to grow dramatically, and consumer prices are already increasing. Power grids are already constrained, and expensive upgrades are required. There could be a consumer backlash, and regulators, historically slow to approve permits for new capacity, may not move quickly enough to enable new supply.

Further, advanced chip manufacturing, which is concentrated mostly in Taiwan, could be disrupted by natural disasters, supply chain issues, or geopolitics. Memory is also critical in the buildout, and most of it is controlled by a few major players who are cautious about overinvesting after past cycles of excess. The AI supply chain is also incredibly complex, posing any number of risks.

Other risks center on a broader economic slowdown or credit cycle that forces enterprises to cut costs. The advertising cycle is particularly exposed, and it supports a high percentage of the cash flow being invested in AI CapEx. Ethical concerns around false or misleading information and hallucinations (false or nonsensical information presented as factual) could also trigger regulatory uncertainty or a pause in investments.

If supply temporarily exceeds demand, it could trigger a sharp selloff given today’s investor positioning and growth expectations.

Positioning for the intelligence economy: What comes next

As model training plateaus, progress shifts towards inference, the deployment of models to generate answers and new data. Model training is itself an iterative process, repeatedly feeding new data and feedback into the model. As applications are built on top of these models, the iterative process continues and constantly evolves.

Greater intelligence generates increased capability, which generates more data to feed the model, which in turn generates new capabilities (quantum computing, for example), and the cycle continues in perpetuity. The growth rate of data is accelerating, and this trend is expected to continue for the foreseeable future. Efficiencies and coding breakthroughs only serve to speed up this process. This is how we ultimately get to Physical AI, to AGI, and even to ASI, a hypothetical intelligence that far surpasses human cognitive capabilities. If the implications of building websites were significant, the implications of AI are exponentially more significant and open-ended.

Perspective is important. While AI is developing rapidly and predicting the future is always uncertain, AI is a powerful force in markets and in our lives, and we are early in the journey. As technology continues to build on itself, adoption and change happen more quickly and more pervasively, creating a rapidly evolving investment environment that is, at times, difficult to navigate. It is also a landscape that holds greater risks and rewards for companies, investors, and countries alike. Continuous learning, open-mindedness, and a willingness to adjust along the way are crucial to navigating the path forward.